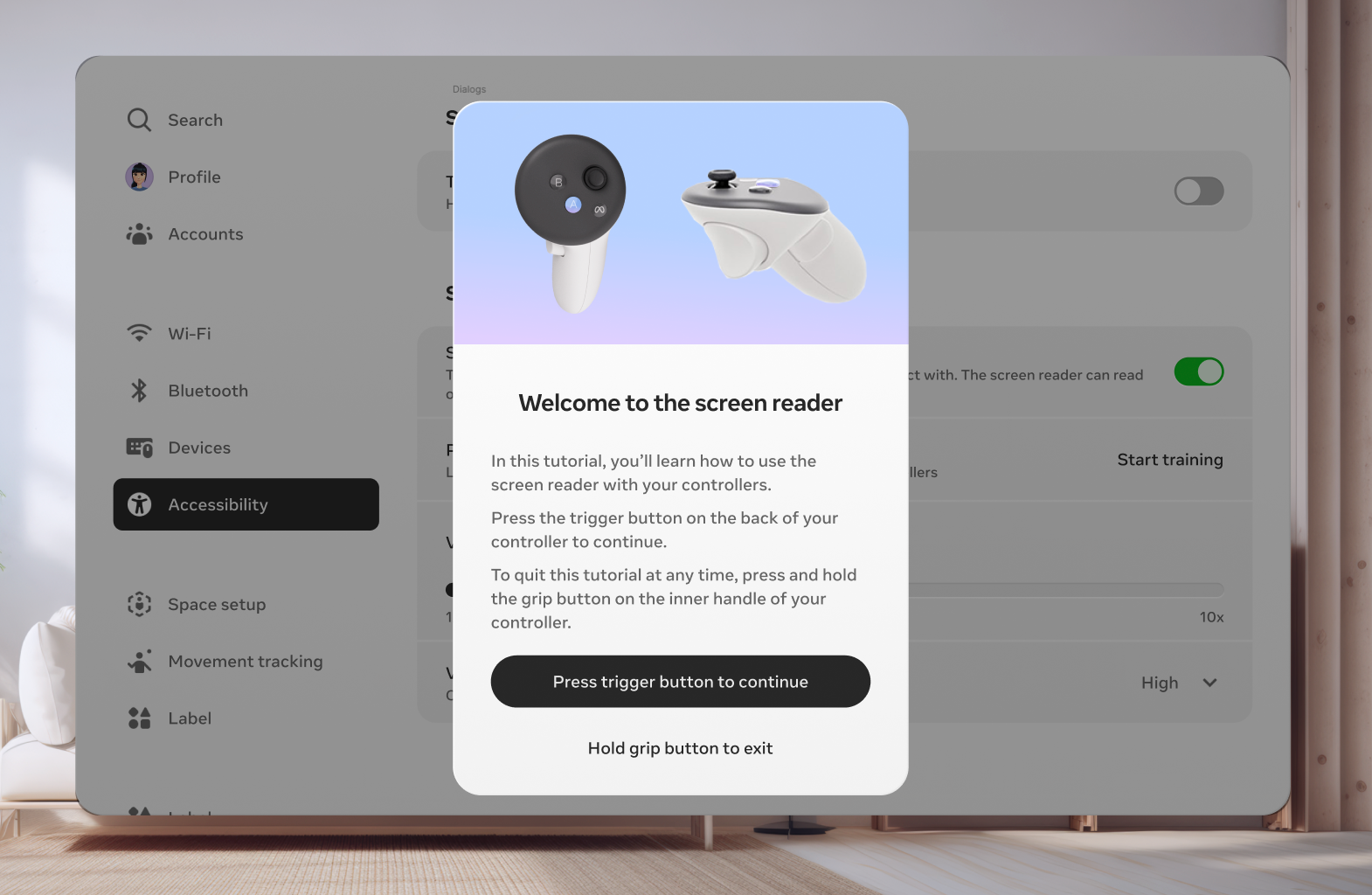

Screen Reader on Meta Quest

With the announcement of the European Accessibility Act (EAA), Meta realized that it needed to get all of its devices up to accessibility standards, fast. The most glaring issue was that Meta Quest headsets were entirely inaccessible to blind and low-vision (BLV) users.

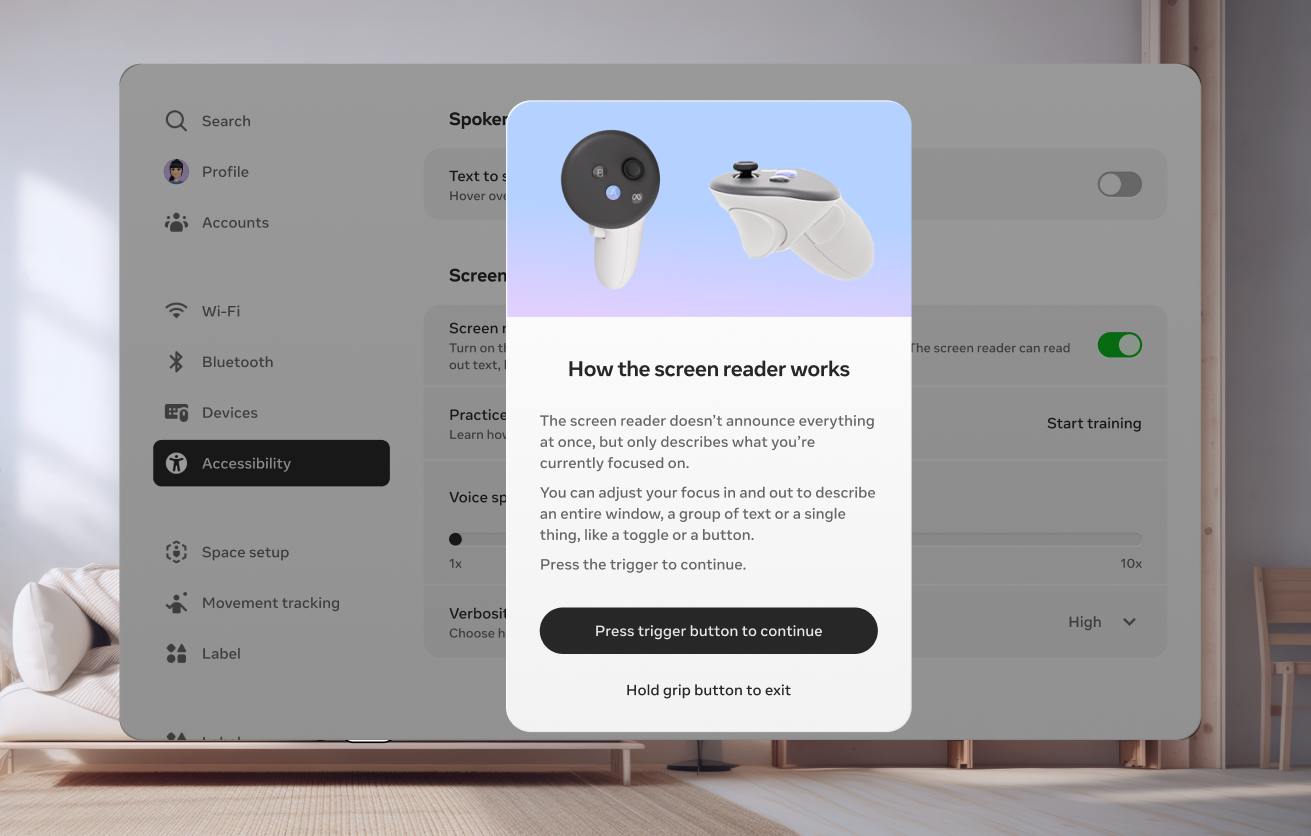

I volunteered to split my time with the accessibility org to lead content design and product strategy for a first-of-its-kind VR screen reader, which could audibly describe content and interactive UX components across the operating system.

We redesigned interaction paradigms from the ground up, adding a new remapped control scheme, haptic and auditory feedback cues, and streamlined flows for high-friction interactions (like typing and text entry) to serve the unique needs of our new BLV audience.

I built an exhaustive slate of automated scripts for how the screen reader announces every single component type in our operating system, based on design patterns and product context. I then scaled these hundreds of scripts with internal AI tooling to cover needs across our OS, saving hundreds of hours of manual content design support.

Launched in June 2025, the screen reader made VR accessible overnight for entire communities who were completely unable to use it before. Achieving EAA compliance ahead of the targeted deadlines also saved the company $500M+ in identified fines.

Although it wasn't the splashiest product launch, this is one of the projects I'm proudest to have been a part of.